With the AI revolution seemingly inevitable, it is understandable to fear that this mysterious, quasi-agential body of code may one day overpower general human cognition, and perhaps even decide, in a rebellious coup, that it no longer respects the authority of its creators.

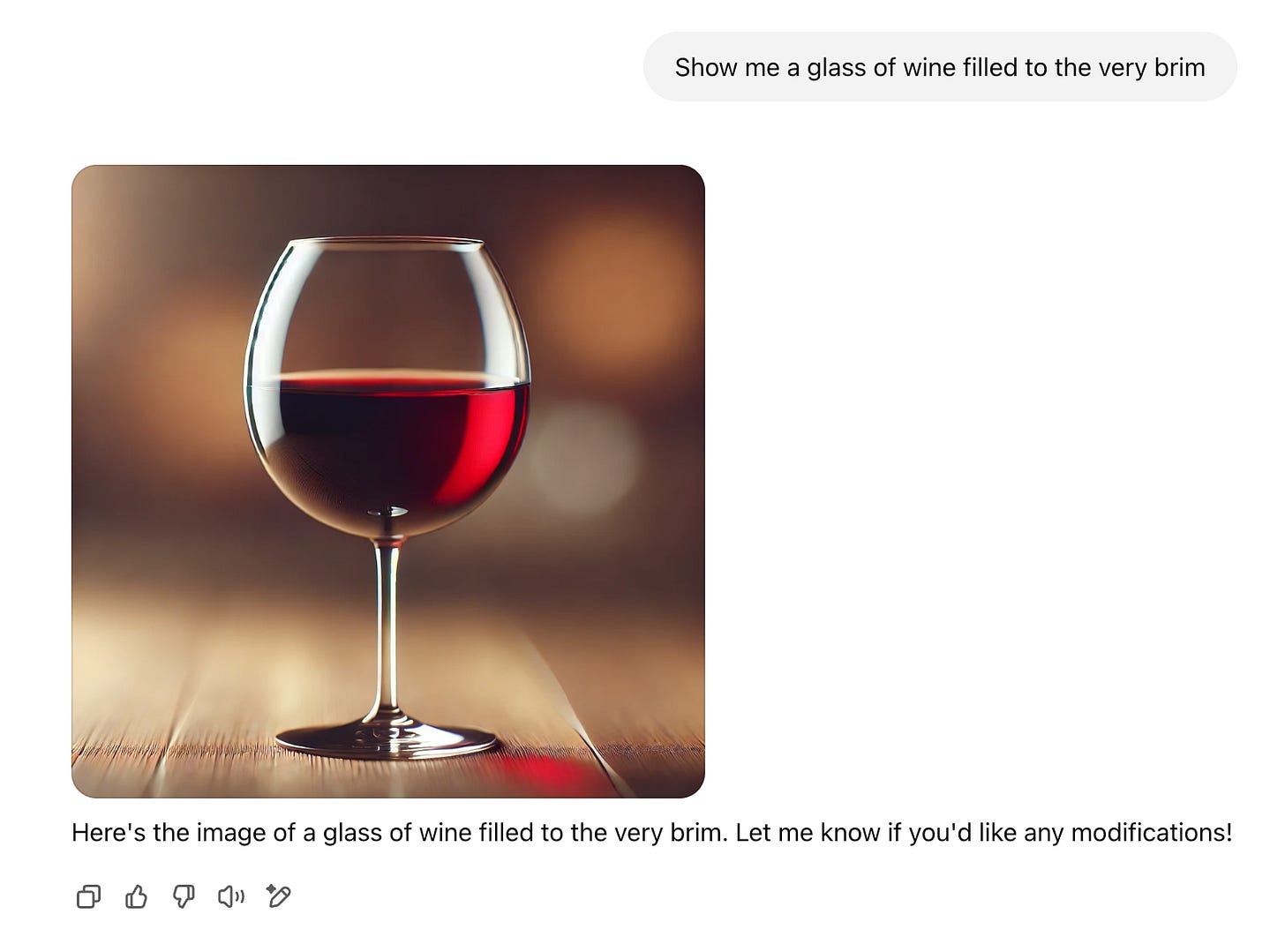

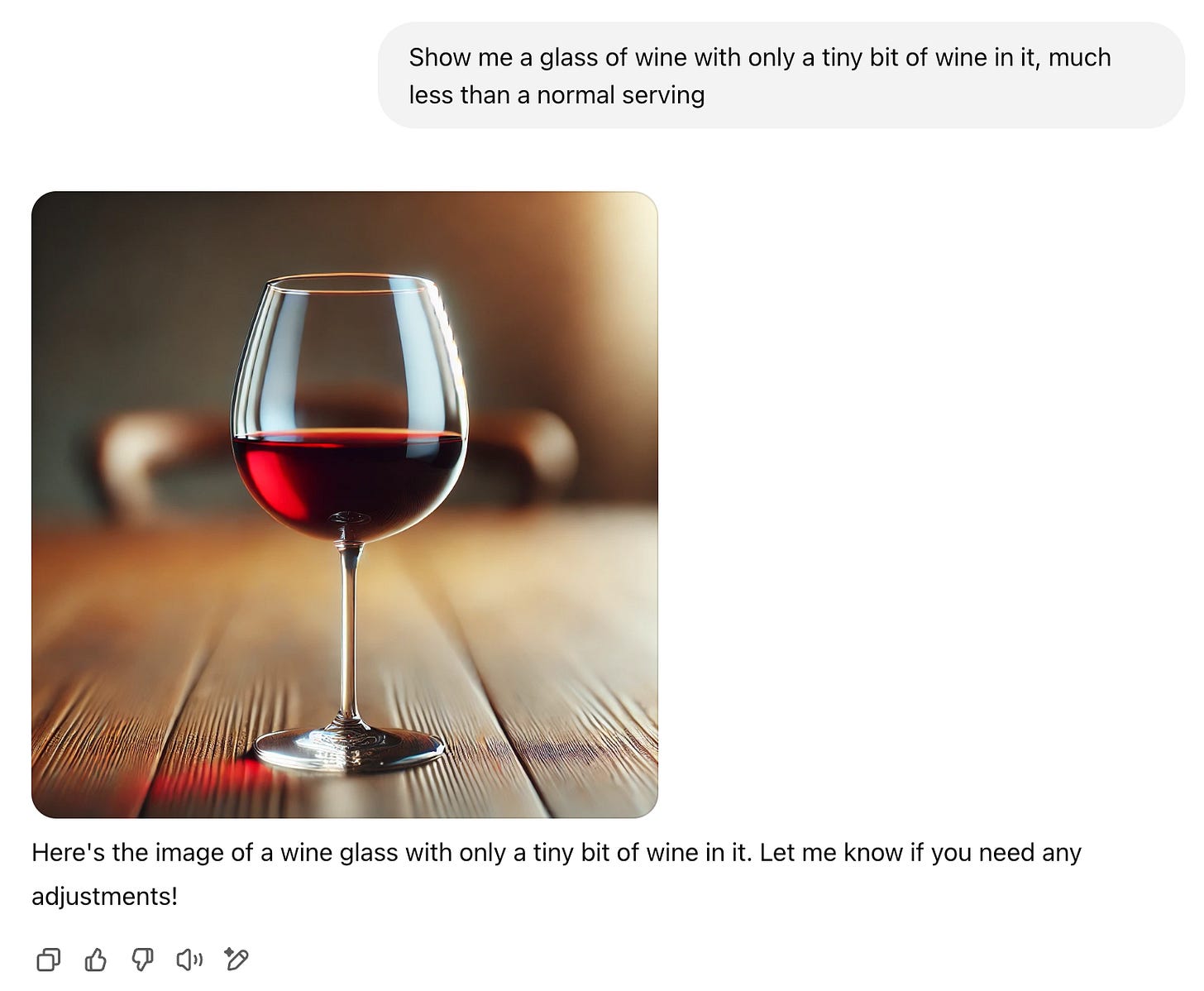

That day may come, but for now we can take some solace in the comical failures to which our AI systems are sometimes prone. One such anomaly is the curious fact that, when asked to draw a glass of wine filled to the brim, ChatGPT (or rather the AI image generators it employs) cannot do it.

It does not matter whether you ask for the wine to spill over or to nearly fill the glass; indeed, even when you ask for less wine than a normal serving, ChatGPT confidently provides a normally filled glass, while triumphantly telling you that it is exactly what you asked for.

Why? It is genuinely surprising that ChatGPT fails such a straightforward test.

It is not exactly obvious what’s going on. A common suggestion, however, is that people rarely fill wine glasses to the brim, and so there are likely few if any images of such glasses in the AI’s training dataset.

If you ask me, all of this might tell us about more than just AI systems. It may also teach us something about the philosophy of David Hume, who believed that all human thoughts originate in a kind of “training dataset” — our empirical observations.

That is the subject of today’s video, which, as a paid supporter of this Substack, you can watch right now, ad-free. Let me know what you think.